Secure Your Digital Footprint: Protect Yourself from ChatGPT Privacy Risks

- bakhshishsingh

- Nov 3, 2025

- 3 min read

Generative AI tools like ChatGPT have redefined productivity — but they’ve also introduced new privacy risks. Recently, shared ChatGPT conversations began surfacing on Google Search, exposing personal and sometimes sensitive information to the public.

This incident underscores a critical truth of modern digital life: privacy is no longer a default — it’s a choice.

At Allendevaux & Company, we help individuals and organizations navigate these risks by combining AI governance, data-protection strategy, and cyber hygiene. Here’s what happened, why it matters, and what you can do right now to secure your digital footprint.

What Happened?

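OpenAI’s “Share” feature, designed to make conversations easily accessible via link, briefly offered an opt-in checkbox that made a shared chat discoverable by search engines. Users who enabled it may not have realized that their chats could then appear in public search results.

That means if you’ve ever clicked “Share” to send a conversation, especially with that option enabled, it might have been indexed by Google or Bing. Some indexed chats included: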

Real names and contact information

Emails and resumes

Confidential disclosures such as health or employment issues

Even more concerning, a few public links contained discussions of illegal activity or highly sensitive topics, now visible to anyone with the right search query.

Why It’s a Big Deal

This isn’t just about data visibility — it’s about data permanence. Once content is crawled by search engines, it can be cached, mirrored, or shared beyond the original platform’s control.

The potential consequences include:

Doxxing: Exposing personal details online

Harassment and blackmail from bad actors

Reputational damage for professionals and organizations

Legal or compliance risks, especially in regulated industries

OpenAI has since disabled discoverability of shared chats, but that doesn’t undo the damage already done. Those indexed pages may still exist, cached on third-party sites or visible in search results.

OpenAI’s Response (and Its Limits)

In response to public concern, OpenAI limited sharing features and initiated cleanup requests to search engines. However, it’s important to understand that the responsibility to secure your data remains with you.

The platform’s corrective actions include:

Removing the option to make shared chats discoverable by search engines

Working with search engines to de-index existing shared URLs

Still, OpenAI cannot guarantee the removal of every cached link. That’s why individual users must take proactive steps to delete and report any exposed chats.

How to Check and Remove Exposed ChatGPT Links

Even if you think you haven’t shared anything sensitive, it’s worth auditing your ChatGPT account. Follow these two essential steps:

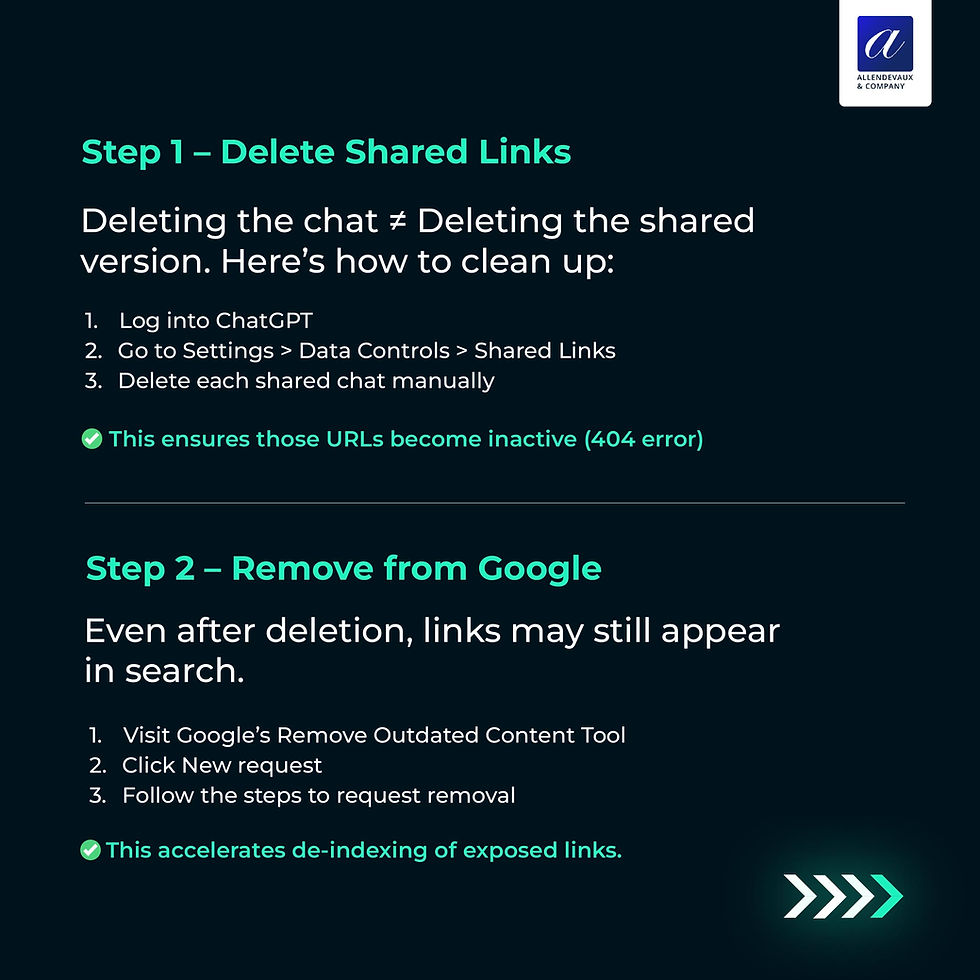

Step 1 – Delete Shared Links

Deleting a chat inside ChatGPT does not remove the shared version. You must delete each shared link manually.

Log into your ChatGPT account.

Go to Settings › Data Controls › Shared Links.

Delete all active shared chats individually.

Step 2 – Request Google Removal

Even after deletion, search results may persist temporarily. To speed up de-indexing:

Visit Google’s Remove Outdated Content Tool.

Click New Request.

Paste the exposed link and follow the on-screen instructions.

Once a shared link is deleted, its URL should return a 404 error, which is the signal Google’s tool looks for. Within a few days of the request, the result should gradually disappear from search.
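Before filing a removal request, you can confirm that a deleted link is really gone. The sketch below is a hypothetical Python helper (not an OpenAI or Google tool) that checks whether each URL looks like a ChatGPT shared link and reports its HTTP status. It assumes shared links use the `https://chatgpt.com/share/` form or the legacy `https://chat.openai.com/share/` form. ChatGPT’s site may block scripted requests or render errors only inside the browser, so treat anything other than a clear 404 or 410 as a prompt to check the link manually.

```python
import sys
import urllib.error
import urllib.request
from urllib.parse import urlparse

# Assumption: hosts used for ChatGPT shared links (current and legacy domains).
SHARE_HOSTS = {"chatgpt.com", "chat.openai.com"}


def is_share_link(url: str) -> bool:
    """Return True if the URL looks like a ChatGPT shared-conversation link."""
    parsed = urlparse(url)
    return (
        parsed.scheme == "https"
        and parsed.hostname in SHARE_HOSTS
        and parsed.path.startswith("/share/")
        and len(parsed.path) > len("/share/")
    )


def classify_status(status: int) -> str:
    """Map an HTTP status code to a suggested next step."""
    if status in (404, 410):
        return "gone: ready for a Remove Outdated Content request"
    if 200 <= status < 300:
        return "possibly still live: open it in a browser and delete it if the chat shows"
    return "inconclusive: check the link manually in a browser"


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Request the URL and return the final HTTP status code (needs network access)."""
    request = urllib.request.Request(url, headers={"User-Agent": "share-link-audit/0.1"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urlopen raises HTTPError for 4xx/5xx responses; its code is what we want.
        return error.code


def main(links: list[str]) -> None:
    for link in links:
        if not is_share_link(link):
            print(f"{link}: not a ChatGPT shared link, skipped")
            continue
        try:
            print(f"{link}: {classify_status(fetch_status(link))}")
        except (urllib.error.URLError, TimeoutError) as error:
            # Network failures (DNS, refused connection, timeout) have no status code.
            print(f"{link}: request failed ({error})")


if __name__ == "__main__":
    main(sys.argv[1:])
```

Save it under any file name and pass one or more links as arguments; links that fail the shape check are skipped rather than fetched.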

Taking Back Control of Your Privacy

The ChatGPT incident is a reminder that data-sharing features require careful management. Privacy isn’t just about secrecy — it’s about control. You can protect yourself by following these best practices:

Audit Shared Data: Review what information you’ve shared across platforms.

Delete Exposed Links: Remove public or unnecessary content immediately.

Monitor Your Digital Footprint: Regularly search your name, email, or company to identify unwanted exposure.

Establish AI Use Policies: For organizations, define internal guidelines on using generative AI safely and compliantly.
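The “Monitor Your Digital Footprint” practice is easier to repeat with a short script. This minimal Python sketch builds Google search URLs that combine the `site:` operator with identifiers you choose, limited to ChatGPT shared-link paths. The function name, the placeholder identifiers, and the `chatgpt.com/share` and legacy `chat.openai.com/share` prefixes are assumptions for illustration; the script only builds links for you to open and does not scrape any results.

```python
from urllib.parse import quote_plus

# Assumption: path prefixes for ChatGPT shared links (current and legacy domains).
SHARE_PREFIXES = ("chatgpt.com/share", "chat.openai.com/share")


def build_audit_queries(identifiers: list[str]) -> list[str]:
    """Return one Google search URL per (share prefix, identifier) pair."""
    urls = []
    for prefix in SHARE_PREFIXES:
        for term in identifiers:
            # site: restricts results to the share path; quotes request an exact phrase.
            query = f'site:{prefix} "{term}"'
            urls.append("https://www.google.com/search?q=" + quote_plus(query))
    return urls


if __name__ == "__main__":
    # Placeholder identifiers: replace with your own name, email, or company.
    for url in build_audit_queries(["Jane Doe", "jane.doe@example.com"]):
        print(url)
```

Opening each printed URL shows what Google currently has indexed under those paths. An empty result is a good sign, but copies mirrored on third-party sites would only turn up in searches without the `site:` filter.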

The Bigger Lesson

AI-driven platforms are powerful, but their convenience can blur the line between private and public. Every shared chat, file, or prompt could become a digital record — one that persists long after you hit “delete.”

At Allendevaux & Company, we help businesses develop AI governance frameworks that integrate privacy, ethics, and compliance from the start. Whether you’re an individual user or an enterprise adopting AI tools, proactive data-protection measures are essential for maintaining trust and minimizing risk.

Take control of your privacy before someone else does.

Visit www.allendevaux.com to learn how our experts can help you secure your digital footprint and establish responsible AI-usage policies.
